Binary code is the language of computers, representing data and instructions using only two symbols: 0 and 1.

It is the foundation of all digital technology and forms the basis of how computers store, process, and transmit information.

Each digit in binary code is called a “bit,” a contraction of “binary digit.” Just as each digit position in our familiar decimal system represents a power of 10 (1, 10, 100, etc.), each position in binary represents a power of 2 (1, 2, 4, 8, etc.).

For example:

- The decimal number 5 is represented in binary as 101 (1*4 + 0*2 + 1*1).

- The decimal number 12 is represented in binary as 1100 (1*8 + 1*4 + 0*2 + 0*1).
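The two examples above can be checked with a few lines of Python. This is only an illustrative sketch using the built-in `format` and `int` functions; the name `binary_forms` is made up for this example.

```python
# Illustrative check of the examples above using Python built-ins.
binary_forms = {}
for n in (5, 12):
    bits = format(n, "b")   # decimal -> binary string
    back = int(bits, 2)     # binary string -> decimal
    binary_forms[n] = bits
    print(n, "->", bits, "->", back)
# prints:
# 5 -> 101 -> 5
# 12 -> 1100 -> 12
```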

Computers use binary code because it can be easily represented by the on/off states of electronic switches, such as transistors. These switches can be in one of two states: on (1) or off (0), mirroring the binary system perfectly.

In addition to representing numbers, binary code is also used to encode characters, instructions, and any other data a computer might need to process. For instance, in the ASCII character encoding, the letter ‘A’ is represented by the binary sequence 01000001 (decimal 65).
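As a small sketch of character encoding, the snippet below looks up the numeric code of a letter and prints it as eight bits. It assumes an ASCII-compatible encoding, which is what Python's `ord` and `chr` give for basic Latin letters; the variable names are illustrative.

```python
# Sketch: a character, its numeric code, and that code as 8 bits.
letter = "A"
code_point = ord(letter)            # numeric code of the character: 65
bits = format(code_point, "08b")    # zero-padded to 8 bits: "01000001"
decoded = chr(int(bits, 2))         # back from bits to the character

print(letter, code_point, bits, decoded)  # prints: A 65 01000001 A
```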

While binary code may seem complex at first, it’s the fundamental language that allows computers to perform the myriad tasks that we rely on them for every day.

Origins and History

Binary code, the fundamental language of computers, has a rich history dating back to ancient times. While the concept of binary numbers, as we understand them today, emerged in the 17th century, the use of binary encoding has roots that stretch far beyond.

- Ancient Numerical Systems: Early civilizations such as the Egyptians, Babylonians, and Mayans developed their own systems for counting and arithmetic. None of these was a binary system in the modern sense (Babylonian numerals were base 60 and Mayan numerals base 20), but some techniques anticipated binary ideas. Egyptian multiplication, for example, worked by repeated doubling, which implicitly breaks a number into powers of 2.

- Yin and Yang in Ancient China: One of the earliest documented influences on binary concepts comes from ancient China. The concept of Yin and Yang, representing complementary opposites such as light and dark or positive and negative, can be seen as an early binary system. This duality formed the basis for philosophical and mathematical principles.

- Leibniz and the Birth of Modern Binary: The German mathematician and philosopher Gottfried Wilhelm Leibniz is credited with formalizing the modern binary numeral system, which he worked out in the late 17th century and published in 1703. He showed that all numbers could be represented using only two digits, 0 and 1, and he linked his system to the hexagrams of the I Ching, an ancient Chinese divination text built from broken and unbroken lines.

- Boole’s Algebra: In the 19th century, George Boole developed Boolean algebra, which laid the groundwork for modern digital computer logic. Boolean algebra introduced the concept of logical operations such as AND, OR, and NOT, which could be represented using binary digits. This was a crucial step towards the practical application of binary code in computing.

- Babbage’s Analytical Engine: Charles Babbage, often considered the “father of the computer,” designed the Analytical Engine in the 1830s. The machine was never built in his lifetime, and its design used decimal rather than binary arithmetic, but it anticipated key elements of later computers: a separate store (memory) and mill (processor), with programs supplied on punched cards. His work laid the foundation for later developments in computing.

- Telegraphy and Early Digital Communication: The practical application of binary code in communication systems began in the 19th century with the invention of telegraphy. Morse code, developed by Samuel Morse and Alfred Vail, used a binary-like system of dots and dashes to represent letters and numbers. This laid the groundwork for modern digital communication.

- Computing Revolution: The mid-20th century saw a revolution in computing with the development of electronic computers. Binary code became the standard language for representing data and instructions within these machines. Innovations such as the stored-program architecture, commonly associated with John von Neumann, solidified the dominance of binary in computing.

- Evolution of Computing: Since then, binary code has remained at the core of computing systems. Advances in technology have led to increasingly complex and powerful computers, but the underlying principles of binary encoding remain unchanged. From mainframes to smartphones, binary code continues to drive the digital world.

Understanding Binary Code

Binary code is a system used in computers to represent data using only two symbols: 0 and 1. Each digit in a binary number is called a “bit.”

Just like in our everyday decimal system, where each position represents a power of 10 (ones, tens, hundreds, etc.), in binary, each position represents a power of 2 (ones, twos, fours, etc.).

For example, let’s take the binary number 1010.

Starting from the right:

- The rightmost digit represents 0 ones (2^0 = 1).

- The next digit to the left represents 1 two (2^1 = 2).

- The next digit to the left represents 0 fours (2^2 = 4).

- The leftmost digit represents 1 eight (2^3 = 8).

So, when we add these together, we get:

(1 * 8) + (0 * 4) + (1 * 2) + (0 * 1) = 8 + 0 + 2 + 0 = 10.

So, in binary, 1010 represents the decimal number 10.
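The place-value breakdown above can be written as a short Python sketch. The function name `place_values` is hypothetical and chosen only for this illustration; it pairs each digit with its power-of-2 weight and then sums the products.

```python
def place_values(bits: str) -> list:
    """Return (digit, weight) pairs for a binary string, leftmost digit first."""
    n = len(bits)
    return [(int(b), 2 ** (n - 1 - i)) for i, b in enumerate(bits)]


pairs = place_values("1010")
total = sum(digit * weight for digit, weight in pairs)

print(pairs)  # [(1, 8), (0, 4), (1, 2), (0, 1)]
print(total)  # 10
```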

Binary is fundamental in computing because computers use electronic switches that can be either on (representing 1) or off (representing 0). This binary system allows computers to perform complex calculations and store vast amounts of information.

Binary Code in Computing

Binary code is the fundamental language used in computing systems to represent data and instructions. It consists of only two digits, 0 and 1, known as bits (short for binary digits). Each bit represents a state in a binary system, where 0 typically represents the absence of an electrical signal, and 1 represents the presence of an electrical signal.

Computers use binary code to represent all kinds of information, including numbers, text, images, audio, and video. For example, a single binary digit (bit) can represent two states: off or on, false or true, low or high voltage, etc. By combining multiple bits, computers can represent more complex information.
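To make "combining multiple bits" concrete: n bits can take 2^n distinct patterns. The sketch below enumerates them for a small n; the helper name `bit_patterns` is an assumption made for this example.

```python
from itertools import product


def bit_patterns(n: int) -> list:
    """List every pattern of n bits as a string, in counting order."""
    return ["".join(p) for p in product("01", repeat=n)]


patterns = bit_patterns(3)
print(len(patterns))  # 8, i.e. 2 ** 3
print(patterns)       # ['000', '001', '010', '011', '100', '101', '110', '111']
```

By the same rule, 8 bits (one byte) give 256 patterns, which is enough to give every ASCII character its own code.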

Binary code is the foundation of all digital computing systems, including microprocessors, memory storage, and communication protocols. Every operation performed by a computer, from simple arithmetic calculations to complex data processing, is ultimately carried out using binary code.

Binary Code Conversion

Binary code is a way of representing information using only two symbols: 0 and 1. It’s fundamental in computer science because computers process data in binary form.

Converting between binary and decimal (base-10) numbers is a common task. Here’s how you can do it:

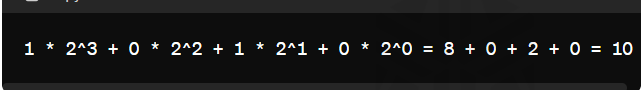

- Binary to Decimal Conversion: To convert a binary number to decimal, you multiply each digit by its corresponding power of 2 and then sum the results. For example, to convert the binary number 1010 to decimal:

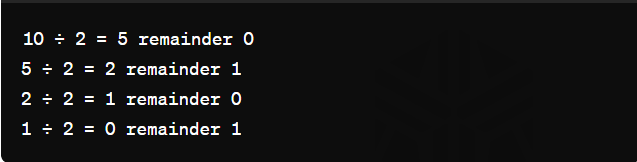

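- Decimal to Binary Conversion: To convert a decimal number to binary, you repeatedly divide the number by 2, record each remainder, and then read the remainders in reverse order. For example, to convert the decimal number 10 to binary: 10 ÷ 2 = 5 remainder 0; 5 ÷ 2 = 2 remainder 1; 2 ÷ 2 = 1 remainder 0; 1 ÷ 2 = 0 remainder 1. Reading the remainders from last to first gives 1010.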
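The repeated-division method can be sketched in Python as follows. This is a minimal illustration for non-negative integers, not a general-purpose converter; the function name `to_binary` is made up here, and the built-in `int(bits, 2)` is used only to check the round trip.

```python
def to_binary(n: int) -> str:
    """Convert a non-negative integer to binary by repeated division by 2."""
    if n < 0:
        raise ValueError("this sketch handles non-negative integers only")
    if n == 0:
        return "0"
    remainders = []
    while n > 0:
        n, r = divmod(n, 2)        # quotient carries on, remainder is the next bit
        remainders.append(str(r))
    # Remainders come out least significant bit first, so reverse them.
    return "".join(reversed(remainders))


bits = to_binary(10)
print(bits)          # 1010
print(int(bits, 2))  # 10
```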

These are the basic methods for conversion. There are also many online converters available if you need to convert larger numbers quickly.

Conclusion

Binary code stands as a testament to humanity’s ingenuity and innovation in the realm of information technology. From its humble origins to its pervasive presence in modern computing, binary code continues to shape the way we interact with technology, driving progress and discovery on a global scale.